platform for virtual reality. The study area is the campus of

Henan Institute of Urban Construction, Pingdingshan, China,

with an area of 1,066,667 m², ranging from 33°46′11″N to

33°46′42″N, 113°10′52″E to 113°11′36″E.
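As a rough consistency check on the figures above, the bounding box implied by the stated coordinates can be compared with the stated area. The sketch below is only an estimate and is not part of the original study: it assumes a spherical Earth of radius 6,371 km and an equirectangular approximation, and the helper names `dms_to_deg` and `bbox_extent_m` are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # assumed mean spherical Earth radius


def dms_to_deg(degrees, minutes, seconds):
    """Convert degrees/minutes/seconds to decimal degrees."""
    return degrees + minutes / 60.0 + seconds / 3600.0


def bbox_extent_m(lat_south, lat_north, lon_west, lon_east):
    """Return (north-south, east-west) extent of a small bounding box in metres.

    Uses an equirectangular approximation, which is adequate for an
    area only about a kilometre across.
    """
    mid_lat = math.radians((lat_south + lat_north) / 2.0)
    ns = math.radians(lat_north - lat_south) * EARTH_RADIUS_M
    ew = math.radians(lon_east - lon_west) * EARTH_RADIUS_M * math.cos(mid_lat)
    return ns, ew


# Coordinates as stated in the text.
lat_s = dms_to_deg(33, 46, 11)
lat_n = dms_to_deg(33, 46, 42)
lon_w = dms_to_deg(113, 10, 52)
lon_e = dms_to_deg(113, 11, 36)

ns_m, ew_m = bbox_extent_m(lat_s, lat_n, lon_w, lon_e)
bbox_area_m2 = ns_m * ew_m
print(f"extent: {ns_m:.0f} m x {ew_m:.0f} m, bounding box: {bbox_area_m2:.0f} m^2")
```

Under these assumptions the box is roughly one kilometre on each side, which is the same order of magnitude as the stated campus area.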

II. FRAMEWORK AND IMPLEMENTATION

A. Framework

A VR-based framework with a three-tier Browser/Server architecture is
designed for the research area. It comprises three layers: the presentation
layer, the logic layer and the data layer [2].

1) The presentation layer is mainly used for interface display and for handling user queries.

2) The logic layer consists of a web server and an application server.

3) The data layer includes the related spatial data and attribute data, such as DEM data.
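The separation of responsibilities among the three layers can be sketched as follows. This is a minimal illustration under stated assumptions, not the system's actual implementation: the class names, the `handle_query` and `show` methods, and the sample record are all hypothetical, and an in-memory dictionary stands in for the real spatial and attribute data.

```python
from dataclasses import dataclass, field


@dataclass
class DataLayer:
    """Holds spatial and attribute data (here, a toy in-memory store)."""
    attributes: dict = field(default_factory=dict)

    def lookup(self, entity_id):
        return self.attributes.get(entity_id)


@dataclass
class LogicLayer:
    """Stands in for the web/application server: cleans and routes queries."""
    data: DataLayer

    def handle_query(self, entity_id):
        clean_id = entity_id.strip()
        record = self.data.lookup(clean_id)
        if record is None:
            return {"status": "not_found", "id": clean_id}
        return {"status": "ok", "id": clean_id, "record": record}


@dataclass
class PresentationLayer:
    """Formats results for display; it never touches the data layer directly."""
    logic: LogicLayer

    def show(self, entity_id):
        result = self.logic.handle_query(entity_id)
        if result["status"] != "ok":
            return f"{result['id']}: no information available"
        rec = result["record"]
        return f"{result['id']}: {rec['name']} ({rec['floors']} floors)"


# Hypothetical sample record, invented purely for illustration.
store = DataLayer({"B01": {"name": "Library", "floors": 5}})
ui = PresentationLayer(LogicLayer(store))
print(ui.show(" B01 "))
print(ui.show("B99"))
```

The point of the arrangement is that the presentation layer only ever talks to the logic layer, so the data store can change without affecting the interface.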

B. Implementation

Overall, the work falls into two categories: work outside Unity3D,
including models, animations, textures, sounds and GUI graphics; and work
inside Unity3D, such as levels, scripts, physics, materials, shaders and GUI.
Figure 2 shows both categories.

III. WORKS OUTSIDE UNITY3D

A. Data Collection

The study requires two kinds of data: spatial data and attribute data.
The data were collected from three sources:

• Terrain maps at a scale of 1:500, obtained by GPS.

• Two-dimensional maps of the study area, including architectural design maps and design sketches previously archived for the research area.

• Textures of roads, lawns, trees, public facilities and buildings, photographed from different angles.
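To make the 1:500 scale of the terrain maps concrete, the following sketch converts between lengths measured on the map and distances on the ground. The function names and the choice of millimetres and metres as units are assumptions made for illustration.

```python
MAP_SCALE_DENOMINATOR = 500  # 1:500, as stated for the terrain maps


def map_to_ground_m(map_length_mm, scale_denominator=MAP_SCALE_DENOMINATOR):
    """Convert a length measured on the map (mm) to ground distance (m)."""
    return map_length_mm * scale_denominator / 1000.0


def ground_to_map_mm(ground_length_m, scale_denominator=MAP_SCALE_DENOMINATOR):
    """Convert a ground distance (m) to its drawn length on the map (mm)."""
    return ground_length_m * 1000.0 / scale_denominator


print(map_to_ground_m(10))    # 10 mm measured on the map
print(ground_to_map_mm(100))  # a 100 m stretch of road
```

At this scale, 10 mm on the map corresponds to 5 m on the ground, which is fine enough to resolve individual buildings and road edges.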

B. Modeling

The virtual reality environment consists of roads, lights, traffic
symbols, trees, buildings and so on. It is essential to build fine, detailed
models of every real object that will be placed in the virtual scene. The
precise geographic location, attribute information and models of the
geographic entities are needed to achieve good-quality scene objects.

C. Export

When modeling is complete, the 3D models are saved in the .fbx format
so that they are compatible with Unity3D.

Animations, textures, scripts, and sounds are saved in the asset

file in a Unity3D project; they are

IV. WORKS IN UNITY3D

Unity3D has a highly optimized graphics pipeline for both DirectX and
OpenGL. Animated meshes, particle systems, and advanced lighting and shadows
all run very fast. It also supports custom effects, so we can create rain,
sparks, dust trails, or anything else we can imagine. During the process of
creating a scene, the work in Unity3D is as follows:

A. Shading

Unity3D comes with 40 shaders ranging from the simple (Diffuse, Glossy,
etc.) to the very advanced (Self Illuminated Bumped Specular, etc.). Using
these shaders makes the rendered scene more realistic.
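To illustrate what a shader at the simple end of that range computes, the sketch below evaluates a Lambertian diffuse term, the standard model behind basic diffuse shading. It is a generic illustration written in Python rather than Unity3D shader code, and the function and parameter names are hypothetical.

```python
import math


def normalize(v):
    """Scale a 3-vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    if length == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return tuple(c / length for c in v)


def lambert_diffuse(normal, to_light, albedo, light_color=(1.0, 1.0, 1.0)):
    """Return the diffuse RGB colour of a surface point.

    normal   -- surface normal (any non-zero length)
    to_light -- direction from the surface point towards the light
    albedo   -- base RGB colour of the material, components in [0, 1]
    """
    n = normalize(normal)
    l = normalize(to_light)
    # Intensity follows the cosine of the angle; faces turned away get 0.
    n_dot_l = max(0.0, sum(a * b for a, b in zip(n, l)))
    return tuple(a * c * n_dot_l for a, c in zip(albedo, light_color))


# Light directly overhead versus light below the surface.
print(lambert_diffuse((0, 1, 0), (0, 1, 0), (0.8, 0.6, 0.4)))
print(lambert_diffuse((0, 1, 0), (0, -1, 0), (0.8, 0.6, 0.4)))
```

More advanced shaders add further terms, such as specular highlights or normal-map perturbation, on top of a diffuse base like this one.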

B. Programming...

C. Collision Detection ...

D. Showing Information by GUI Control ...

E. Publishing...

Note:

I have not discussed the sections above because I did not find it necessary.

...

F. Others

Sounds, animations and videos are added in Unity3D through GameObjects,
which greatly enhances the user's immersion in the virtual reality
system [8].

G. Integrating

When all the work above is complete, we save the scenes, scripts, audio,
attribute data, pictures, textures, etc. in a Unity3D project.

VI. CONCLUSION

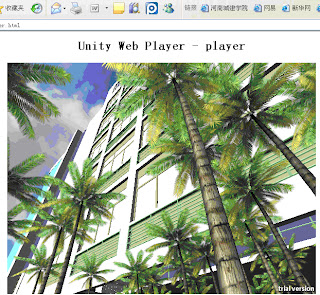

This study builds a virtual reality system based on Unity3D, taking a
campus as an example. The system is highly vivid and strongly interactive.
Users can download a plug-in to browse the study area online. With a full
range of personalized operating modes, they can choose their own way to
browse and participate in the virtual environment, using the designated keys
on the keyboard to explore as they wish without affecting other users.
Because the scene is updated 60 times per second, users interact almost
subconsciously and are immediately immersed in the virtual scene for
spontaneous exploration and observation. This shows that Unity3D can serve
not only game development but also real-world applications. The virtual
reality system can also query information about the research area and
perform simple spatial analyses such as illumination analysis and distance
measurement.
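Distance measurement of the kind mentioned above reduces to Euclidean distance between points picked in the scene. The following is a minimal sketch, assuming that picked points are available as (x, y, z) tuples and that scene units are metres; the function name `path_length` and the sample points are hypothetical and do not come from the study.

```python
import math


def path_length(points):
    """Total length of a polyline through 3D points given as (x, y, z) tuples."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


# Hypothetical picked points in scene units (assumed to be metres).
picked = [(0.0, 0.0, 0.0), (30.0, 0.0, 40.0), (30.0, 12.0, 40.0)]
print(math.dist(picked[0], picked[1]))  # straight-line distance between two picks
print(path_length(picked))              # cumulative distance along all picks
```

The same helper covers both a single point-to-point measurement and a multi-segment route, since a two-point list is simply a one-segment polyline.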

In the field of geographic information systems, there are typically two
ways to obtain a 3D virtual reality: one is to use a professional 2D
platform such as ArcGIS and build the virtual reality through secondary
development; the other is to use 3D or 2.5D software, for example Skyline,
as the development platform.

These are only the preliminary results of our study. The next step is to
combine GIS software with the system so that it possesses the sophisticated
spatial analysis functions of GIS. This will not only extend the
functionality of the virtual reality system but also provide new ideas for
the development of 3D GIS.

References:

This paper appears in:

Date of Conference: 18-20 June 2010

Author(s): Sa Wang, Key Lab. of Resource Environ. & GIS, Capital Normal Univ., Beijing, China